Sensor Operator Training with MAK ONE

Sensor operators and aircraft pilots provide critical intelligence and support during complex, sometimes dangerous missions. To qualify, they must master the ground control station’s sensor system and develop the tactical skill to work as a team during missions with unpredictable complications.

To help them train, MAK offers flexible and powerful simulation products designed to address the full range of Sensor Operator and Pilot training requirements. Here are a few examples:

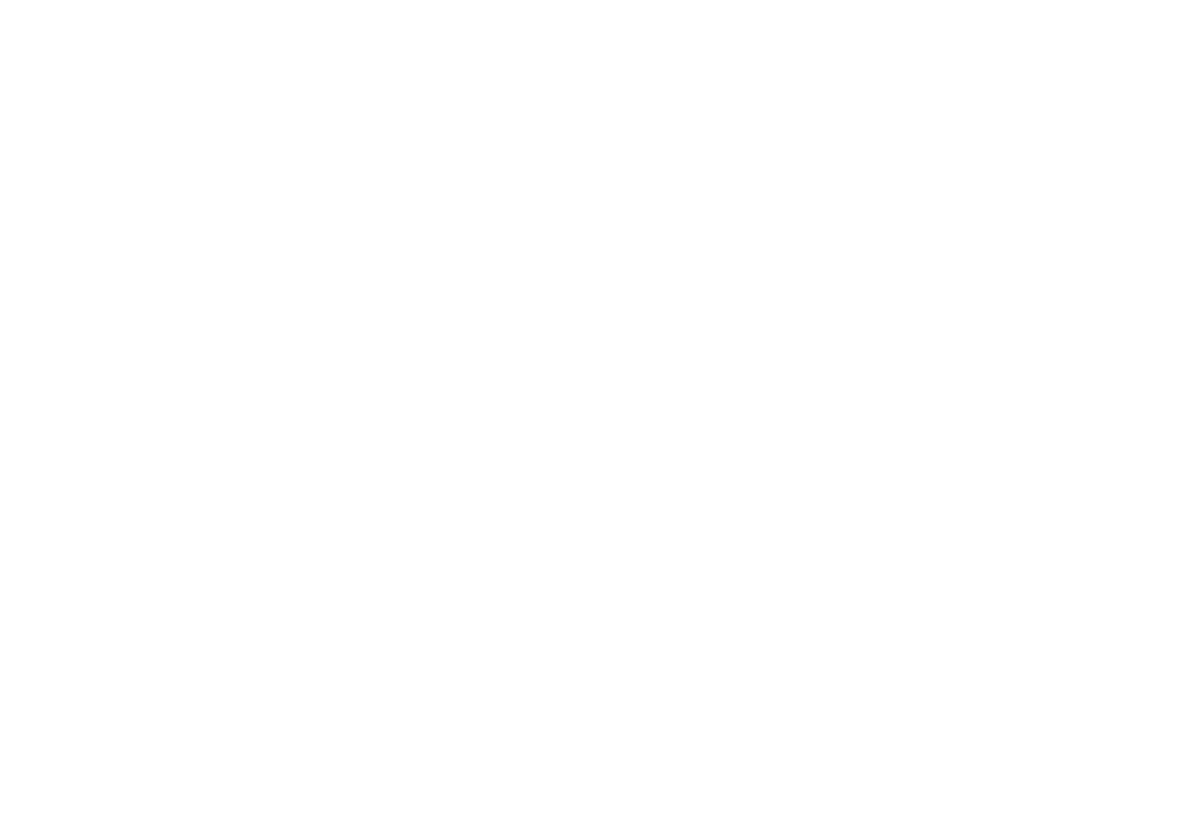

Use Case #1, Add ISR sensors to an existing training system

Whether you want to add an airborne sensor asset to an existing exercise or host a classroom full of beginner sensor operators, VR-Engage Sensor Operator integrates quickly and easily with simulation systems right out of the box, as shown in Figure 1. Experienced participants can use it to provide intel from their payload’s vantage point, and beginners can use it for baseline training.

Figure 1: A Sensor Operator adds another viewpoint to a JTAC system

All MAK products are terrain- and protocol-agile, allowing you to leverage your existing capabilities while attaching a gimbaled sensor to any DIS or HLA entity in your existing simulation system.

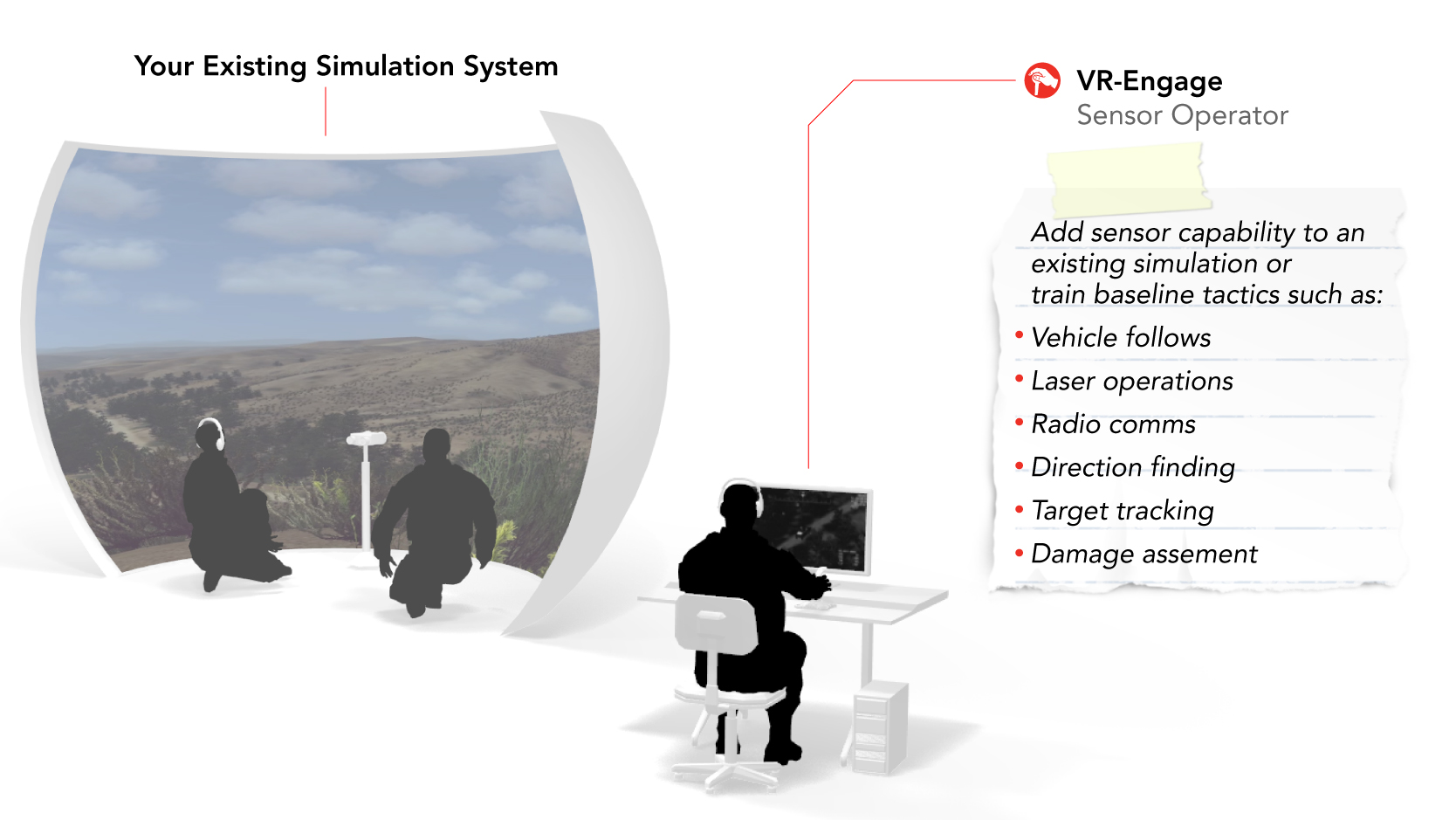

Use Case #2, Train in-depth system operation

Before operating a sensor system in the real world, operators need training for in-depth sensor system operation.

This training combines VR-Forces as the simulation engine with VR-Engage as the Sensor Operator role-player station. VR-Forces is used to design scenarios that require students to learn the essential skills of controlling the sensor gimbal. It lets you assign real-world Patterns of Life and add specific behavioral patterns to human characters or crowds. Fill the world with intelligent, tactically significant characters (bad guys, civilians, and military personnel) to search for or track, and create targets, threats, triggers, and events. VR-Forces also provides the computer-assisted flight control for the sensor operator’s aircraft.

VR-Engage can be configured to use custom controllers and menu structures that mimic the buttonology and emulate the physical gimbal controls. Adding SensorFX to VR-Engage further enhances fidelity by emulating physics-based visuals, so that the simulated imagery shows the same effects, enhancements, and optimizations as the actual sensor. In short, students can train on a replica of their system configuration.
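To illustrate what emulating a physical gimbal control can involve, here is a minimal, hypothetical sketch of rate control, a common scheme in which thumbstick deflection sets the gimbal's slew rate rather than its absolute angle. It is not MAK's API; the `GimbalState` class, the dead-zone width, the 30 deg/s maximum rate, and the elevation limits are all illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class GimbalState:
    """Hypothetical gimbal pointing state, in degrees."""
    azimuth: float = 0.0    # wrapped to [0, 360)
    elevation: float = 0.0  # negative values look down


def apply_deadzone(value: float, deadzone: float = 0.1) -> float:
    """Clamp stick input to [-1, 1], zero out small deflections,
    and rescale the remainder so full deflection still maps to +/-1."""
    value = max(-1.0, min(1.0, value))
    if abs(value) < deadzone:
        return 0.0
    sign = 1.0 if value > 0 else -1.0
    return sign * (abs(value) - deadzone) / (1.0 - deadzone)


def slew(state: GimbalState, stick_x: float, stick_y: float, dt: float,
         max_rate: float = 30.0, el_min: float = -90.0,
         el_max: float = 10.0) -> GimbalState:
    """Advance the gimbal by one time step of dt seconds.
    Stick deflection sets the slew rate in deg/s (assumed rate control)."""
    az_rate = apply_deadzone(stick_x) * max_rate
    el_rate = apply_deadzone(stick_y) * max_rate
    azimuth = (state.azimuth + az_rate * dt) % 360.0
    # Hypothetical mechanical limits on elevation.
    elevation = max(el_min, min(el_max, state.elevation + el_rate * dt))
    return GimbalState(azimuth, elevation)
```

For example, holding the stick fully right and fully down for one second, `slew(GimbalState(), 1.0, -1.0, 1.0)`, moves the gimbal to roughly 30 degrees azimuth and -30 degrees elevation. A trainer that replicates a specific sensor would substitute that device's real rates, limits, and input curves.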

Figure 2: Training to operate a particular sensor device

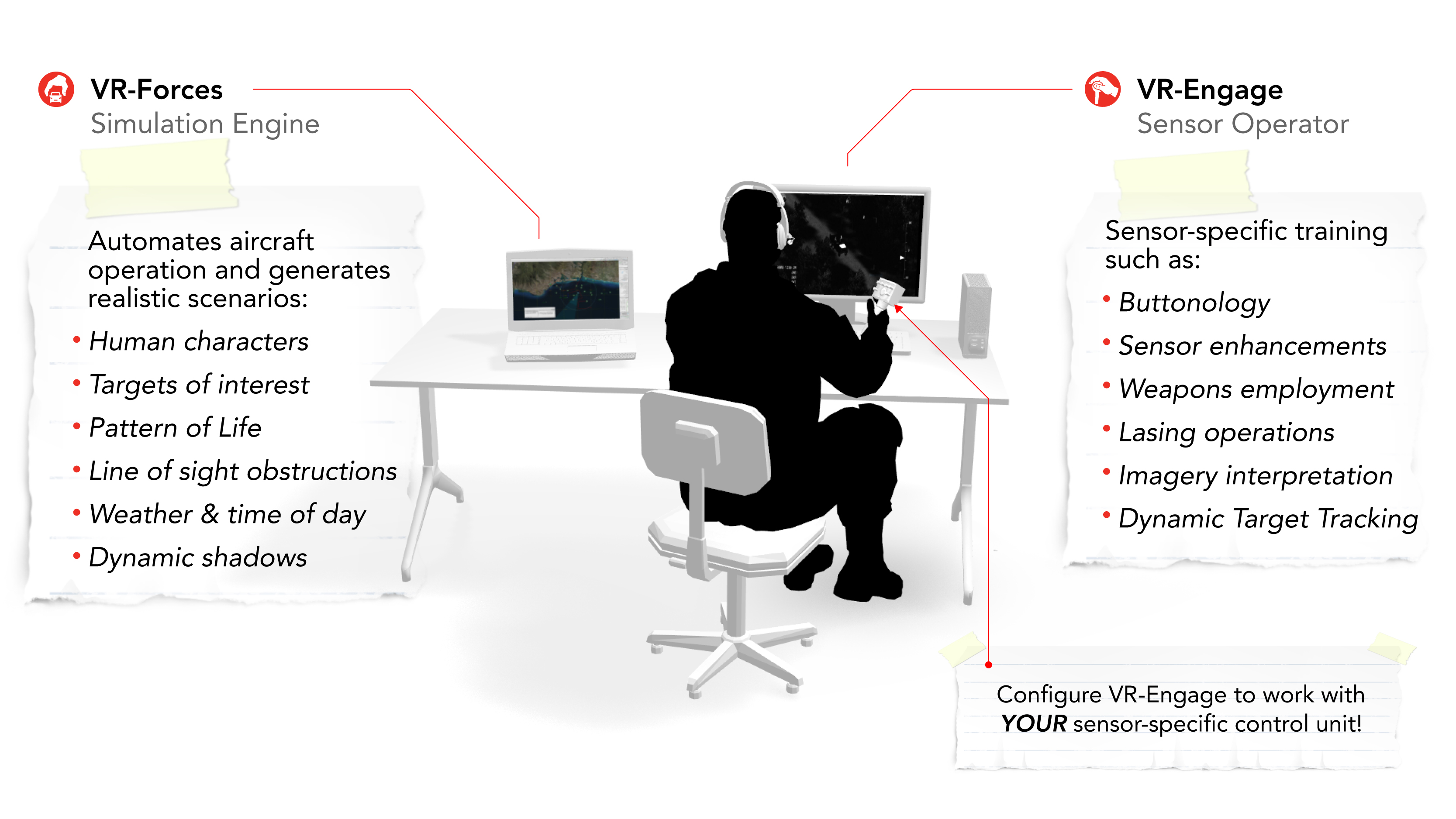

Use Case #3, Train the full airborne mission team

Before integrating into a larger mission, the sensor and platform operators must learn to operate tactically as a Remotely Piloted Aircraft (RPA) crew.

These skills can be acquired while training side by side on a full-mission trainer. The stations in Figure 3 use combinations of VR-Forces and VR-Engage to fulfill the roles of the Instructor, the Pilot of the aircraft, and the Sensor Operator.

Pilot:

In the Pilot station, VR-Forces provides computer-assisted flight control of the UAV.

Through the VR-Forces GUI’s 2D map interface, a user can task a UAV to fly to a specific waypoint or location, follow a route, fly a desired altitude or heading, orbit a point, and even point the sensor at a particular target or location (sometimes the pilot, rather than the Sensor Operator, will want to temporarily control the sensor). A user can also create waypoints, routes, and other control objects during flight. In addition, the VR-Forces GUI can show the footprint and frustum of the sensor to enhance situational awareness (in 2D and 3D).
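The geometry behind pointing a sensor at a target and drawing its ground footprint can be sketched in a few lines. This is a simplified illustration, not how VR-Forces implements these features: it assumes a flat-earth local East-North-Up frame in metres and flat ground, and the function names are hypothetical.

```python
import math


def look_at(sensor_enu, target_enu):
    """Return (azimuth_deg, elevation_deg, slant_range_m) from sensor to target.

    Positions are (east, north, up) tuples in metres in a shared local
    flat-earth frame. Azimuth is clockwise from north; negative elevation
    means the sensor looks down (depression angle = -elevation).
    """
    de = target_enu[0] - sensor_enu[0]
    dn = target_enu[1] - sensor_enu[1]
    du = target_enu[2] - sensor_enu[2]
    horizontal = math.hypot(de, dn)
    azimuth = math.degrees(math.atan2(de, dn)) % 360.0
    elevation = math.degrees(math.atan2(du, horizontal))
    slant_range = math.sqrt(de * de + dn * dn + du * du)
    return azimuth, elevation, slant_range


def footprint_extent(altitude_m, depression_deg, vertical_fov_deg):
    """Ground distances (m) from the point directly below the sensor to the
    near and far edges of the footprint along the line of sight, assuming
    flat ground. Near is clamped to 0 when the view includes the point
    directly below; far is math.inf when the top of the view reaches the
    horizon."""
    near_angle = math.radians(depression_deg + vertical_fov_deg / 2.0)
    far_angle = math.radians(depression_deg - vertical_fov_deg / 2.0)
    near = altitude_m / math.tan(near_angle) if near_angle < math.pi / 2 else 0.0
    far = altitude_m / math.tan(far_angle) if far_angle > 0 else math.inf
    return near, far
```

For a UAV at 3,000 m directly west of a target 4,000 m away, `look_at((0, 0, 3000), (4000, 0, 0))` gives an azimuth of 90 degrees (due east), an elevation of about -36.87 degrees, and a slant range of 5,000 m. A real system would also account for Earth curvature, terrain elevation, and the aircraft's attitude.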

VR-Engage provides manual control of the aircraft, including realistic aircraft dynamics and responses to environmental conditions. In this role, the Pilot can choose to see what the sensor sees and can even share control of it with the Sensor Operator.

Sensor Operator:

VR-Engage provides the role of the Sensor Operator, letting the user manually control the gimbaled sensor on the platform. In this role, the Sensor Operator gains the advanced skills and tactical training required to become an integral part of the mission. They learn to acquire and track targets and to prioritize mission-related warnings, updates, and radio communications.

Instructor Station:

This is where scenario design gets creative: the instructor can use VR-Forces to inject complexity into the scenario, using its advanced AI to create tactically significant behaviors in human characters or crowds. Tweak clouds and fog or add rain to change visibility, increase wind speed and change its direction, and even jam communications at runtime.

As students advance through full-mission training, they learn to support their crewmates in complex missions. They share salient information with each other, operate radios, and communicate with ground teams, rear-area commanders, and other entities covering the target area.

Figure 3: Apply knowledge of systems, weapons and tactics to complete missions together

MAK products are well suited to Sensor Operator training. VR-Engage’s Sensor Operator role is ready to connect to existing training simulations, can be configured and customized to emulate specific sensor controllers, and can interoperate with the full capabilities of VR-Forces to form full-mission trainers.

MAK products can be used for live, virtual and constructive training.

Reach out to us at