MAK ONE: The Synthetic Environment Foundation for Immersive Pilot Training

MAK Technologies has been a global leader in defense modeling and simulation since 1990. Our Cambridge, Massachusetts-based Product Team develops the comprehensive “MAK ONE” suite of Commercial Off-the-Shelf (COTS) simulation and visualization engines. These flexible, mixed-reality-capable products provide an excellent Synthetic Environment foundation for an enhanced Immersive Training Device (ITD).

Our Orlando-based Training Solutions Team has worked on programs such as the US Army Synthetic Training Environment (STE) Common Synthetic Environment (CSE), the Royal Air Force (RAF) Gladiator program, the Aviation Combined Arms Tactical Trainer (AVCATT), and the RAF F-35 Capability Concept Demonstrator. The team has built extended-reality and mixed-reality pilot and multi-crew training devices, integrated them with government-provided or emulated Operational Flight Programs (OFPs), built training systems around those devices, and deployed simulations both on premises and in the cloud. Many of our delivered solutions are built on our own MAK ONE platform, and we also have experience with game engines, government products, and third-party alternatives.

MAK is ready to prime or partner to serve the greater goal of Air Force pilot training.

Capabilities of the core COTS engines and Synthetic Environment components

The VR-Engage multi-role virtual simulator provides the basis for a flexible, mixed-reality-based immersive training device. Through its image generator (IG) component, VR-Vantage, VR-Engage has built-in support for many virtual reality and mixed reality devices, including the Varjo XR-3, VRgineers XTAL3, and HTC VIVE Pro. It can be configured to select which elements of the real world to “bring” into the virtual world, using geometry masks, depth masks, trackers, or chroma key methods.
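As a generic illustration of the chroma key method (not MAK's implementation, which is not described here), the sketch below decides, pixel by pixel, whether the real camera image is kept or replaced by the rendered virtual scene. It assumes RGB pixels stored as nested lists of tuples, a green key color, and a simple color-distance tolerance; the function names and default values are hypothetical.

```python
# Hypothetical illustration of chroma-key compositing for a mixed-reality cockpit.
# Pixels close to the key color (e.g., a green screen around the cockpit) are
# replaced by the virtual scene; everything else (hands, physical controls) is kept.

def chroma_key_mask(camera, key=(0, 255, 0), tolerance=60):
    """Return a mask: True where the real camera pixel is kept."""
    kr, kg, kb = key
    tol_sq = tolerance * tolerance
    mask = []
    for row in camera:
        mask_row = []
        for r, g, b in row:
            # Squared Euclidean distance in RGB space to the key color.
            dist_sq = (r - kr) ** 2 + (g - kg) ** 2 + (b - kb) ** 2
            mask_row.append(dist_sq > tol_sq)
        mask.append(mask_row)
    return mask


def composite(camera, virtual, mask):
    """Per pixel: camera where the mask is True, virtual scene elsewhere."""
    return [
        [cam if keep else virt for cam, virt, keep in zip(cam_row, virt_row, mask_row)]
        for cam_row, virt_row, mask_row in zip(camera, virtual, mask)
    ]


# Example: one row with a green-screen pixel and a skin-tone pixel.
camera = [[(0, 255, 0), (200, 150, 120)]]
virtual = [[(90, 140, 220), (90, 140, 220)]]  # rendered sky
mask = chroma_key_mask(camera)
print(composite(camera, virtual, mask))  # [[(90, 140, 220), (200, 150, 120)]]
```

A real-time system would more likely run this per-pixel test in a GPU shader and combine it with depth or geometry masks; a hard threshold like this one also produces jagged edges that a soft blend would smooth.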

The standard MAK ONE installation includes high-fidelity 3D cockpit models, including a T-38C, that demonstrate the mixed-reality capabilities of VR-Engage: switches cast shadows, buttons illuminate, and instruments and screens have realistic reflections. The built-in “representative” virtual multifunction displays (MFDs), which include several active instruments rendered with DiSTI’s GL Studio libraries, can be replaced by the actual video output from commercial or government-furnished OFPs. That output can be rendered as textures onto tagged locations in the virtual 3D cockpit model, or shown on dedicated physical displays that are masked into the virtual scene using mixed-reality cameras.

VR-Engage is built on a modular, open design. Users can supply their own 3D models to supplement or replace the preconfigured models (including cockpit interiors), and plug-ins can tailor it to use specific flight dynamics models, including third-party models that may run on an external machine. It has been demonstrated with a wide variety of fixed-wing and rotary-wing aircraft. VR-Engage, its underlying IG (VR-Vantage), and its underlying simulation engine (VR-Forces) are mature, flexible COTS products that have been used on programs around the world. The software is lightweight: authorized users can download it from our website and install it with a standard Windows or Linux installer on any laptop, desktop, or virtual machine with a suitable NVIDIA graphics card. Deployments scale from a simple desktop setup with a game controller and monitor, to a mixed-reality configuration with custom cockpit hardware, to an edge-blended multi-channel dome display.

Our Synthetic Environment software natively loads terrain in a wide variety of standard formats, including CDB, glTF/3D Tiles, and OpenFlight; directly from source data (e.g., DTED, GeoTIFF, shapefiles, and OpenStreetMap); or from VR-TheWorld Server through standard streaming and web mapping protocols such as Web Map Service (WMS), Web Feature Service (WFS), and Web Map Tile Service (WMTS). The engines simulate and visualize a wide variety of weather conditions, including localized weather, volumetric clouds, and procedurally generated snow based on elevation, accumulation, and other factors. Weather and terrain affect line of sight, detection, sensor displays, and the mixed-reality scene.
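To show what a standard web mapping request looks like, here is a minimal sketch that builds an OGC WMS 1.3.0 GetMap request URL. The server URL and layer names are hypothetical placeholders, and this is not how VR-TheWorld Server or the MAK ONE engines are configured; it only illustrates the request parameters the WMS standard defines.

```python
from urllib.parse import urlencode


def wms_getmap_url(base_url, layers, bbox, width, height,
                   crs="EPSG:4326", image_format="image/png"):
    """Build a WMS 1.3.0 GetMap request URL.

    For EPSG:4326, WMS 1.3.0 uses latitude/longitude axis order, so bbox is
    (min_lat, min_lon, max_lat, max_lon).
    """
    params = {
        "SERVICE": "WMS",
        "VERSION": "1.3.0",
        "REQUEST": "GetMap",
        "LAYERS": ",".join(layers),
        "STYLES": "",  # empty value requests the default style for every layer
        "CRS": crs,
        "BBOX": ",".join(str(v) for v in bbox),
        "WIDTH": str(width),
        "HEIGHT": str(height),
        "FORMAT": image_format,
    }
    # urlencode percent-encodes commas, colons, and slashes in the values.
    return base_url + "?" + urlencode(params)


# Hypothetical server and layer names, for illustration only.
url = wms_getmap_url("https://example.com/wms", ["imagery", "roads"],
                     (42.0, -71.5, 42.5, -71.0), 512, 512)
print(url)
```

Percent-encoded commas and colons are valid in the query string; a WMS server decodes them before parsing the parameter lists.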

3D models are loaded natively in a variety of standard formats, such as FBX, OpenFlight, and COLLADA. Our flexible, plug-in-driven model-loading module is well positioned to add support for the required Universal Scene Description (USD) format.

Examples of systems built with MAK ONE:

Royal Air Force Capability Concept Demonstrator: This project combines virtual reality and simulation technology to enhance training for the Royal Air Force (RAF). The F-35 Capability Concept Demonstrator gives flight students an immersive synthetic training environment, built with MAK ONE, in which they can safely practice procedures and tactics.

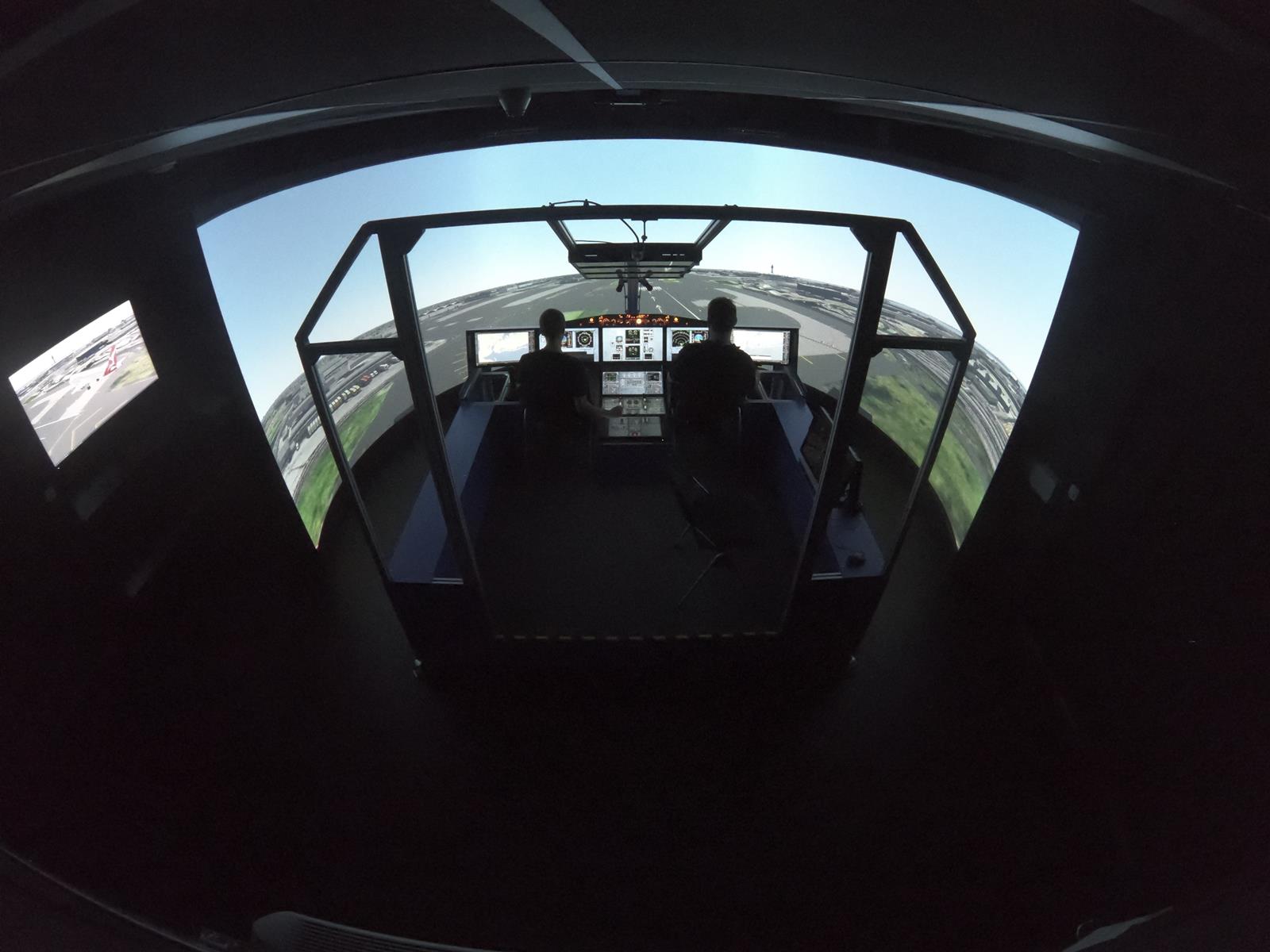

ONERA Flight Simulator: The French aerospace lab’s flight simulator uses MAK ONE products, including VR-Vantage, VR-Engage, and VR-Forces. ONERA conducts cognitive engineering research to develop new Human/System Interaction (HSI) concepts for managing complex systems and operations, and simulation is an essential way for human operators to experience new situations safely.

An integrated enhanced Immersive Training Device

MAK’s Training Solutions Team stands ready to customize the foundational COTS Synthetic Environment to meet the specific needs of air training projects.

Our team has experience integrating COTS and GOTS flight dynamics models, as well as OFP software and hardware, with the core MAK ONE engines. On the US Army STE CSE program, MAK integrated the MAK ONE VR-Vantage IG and VR-Engage virtual simulator application with Boeing’s Original Equipment Manufacturer (OEM) OFP for the Apache helicopter, which had been made “simulation-ready” under the earlier AVCATT program. For the Black Hawk and Chinook, we integrated with an emulated OFP developed by the Army Aviation and Missile Research, Development and Engineering Center (AMRDEC). MAK replaced the built-in “representative” flight dynamics model with validated commercial third-party models from Advanced Rotorcraft Technologies, using the MAK ONE Application Programming Interfaces (APIs) to build a plug-in that communicated with the model running on a separate Linux virtual machine. On this program, MAK used the Varjo XR mixed-reality headset, integrated through a Hardware Abstraction Layer API with a prototype version of the RVCT hardware from Cole Engineering, including high-fidelity control-loaded input devices.

Supporting elements of a complete training system

To support training requirements for formation flight, tactical maneuvers, and target engagement, MAK’s VR-Forces computer-generated forces (CGF) and simulation execution engine can generate realistic battlefield scenarios by populating the synthetic environment with friendly and hostile AI forces in the air, at sea, and on the ground. The VR-Forces Graphical User Interface (GUI) serves as the run-time Instructor Operator Station (IOS) and scenario authoring tool, allowing unified laydown and tasking of both the trainees’ ownship entities and the AI-controlled CGF entities. The VR-Vantage Plan View Display and 3D Viewer have been used to support virtual sand tables, and the MAK Data Logger is often used to export recorded scenario data for analysis by external performance assessment tools.

The MAK ONE products have been deployed and demonstrated in the kind of hybrid cloud/on-premises architecture used in the PTT Digital Backbone. Our open APIs, modular design, and decades of experience supporting COTS and Training Solutions customers allow us to work with any partner to integrate immersive training devices with the required external elements.

Relevant Projects and Past Performance

Royal Air Force Gladiator Program (also known as DOT(C)-Air Core Systems and Services)

The UK’s Ministry of Defence selected Boeing Defence UK to provide a virtual system that will allow Royal Air Force (RAF) crews and supporting forces to train together across multiple locations. The capability, known as Gladiator, is based entirely on the MAK ONE Synthetic Environment software, which generates and runs scenarios with realistic threats and pattern-of-life activity, provides visualization during exercises and debriefs, and delivers pilot, sensor, and other role-player stations. Third-party suppliers have used our open APIs to add high-fidelity flight dynamics, sensor, electronic warfare, and Tactical Data Link models to the system. Separate UK contracts are funding integrators to build platform-specific flight simulators on the common MAK ONE-based Gladiator platform, demonstrating its flexibility and open architecture.

US Army Synthetic Training Environment (STE) Common Synthetic Environment (CSE)

In 2019, the US Army awarded MAK an Other Transaction Agreement (OTA) to prototype a unified simulation platform for the Army’s STE. The STE envisioned a single unified system available to all training audiences at the point of need, using portable and reconfigurable hardware and connected to Network Enabled Mission Command systems, operational networks, and live training instrumentation. Under the STE CSE OTA, MAK was both the engine provider and the integrator, building prototype multi-crew training devices for aircraft as well as ground vehicles. On this program, MAK implemented support for MAXAR’s One World Terrain in the glTF/3D Tiles format (demonstrating our ability to implement new content file formats quickly) and integrated several mixed-reality devices, including the Varjo XR-3.